Introduction

In my previous blog post on Managed Kubernetes in the Cloud I explained the theoretical side of managed Kubernetes services and upgrade strategies. In this post I focus on the technical side: creating backups with a tool called Velero, previously known as Heptio Ark. Velero can be used on public clouds as well as on on-premises clusters. Velero helps you to:

- Back up your cluster and restore it in case of loss.

- Migrate cluster resources to other clusters.

- Replicate your production cluster to development and testing clusters.

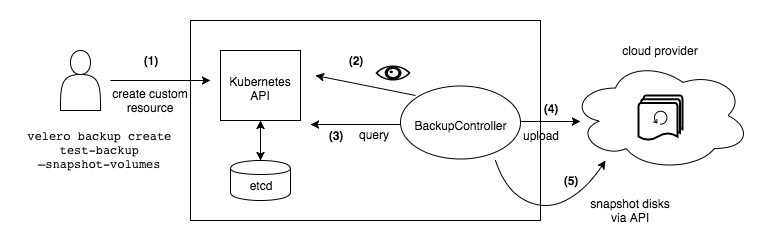

In short, Velero backs up the Kubernetes resources of your cluster (by querying the Kubernetes API server rather than reading the etcd key-value store directly) and creates snapshots of persistent volumes. Besides that, it offers more functionality, such as scheduling backups and filtering backups to certain namespaces.

Enough, let’s dive into the technical details!

Prerequisites

To demonstrate backing up and restoring a cluster with Velero, I use the following tools:

- kubectl: 1.18.6 [install kubectl]

- aws cli: 2.0.42 [install aws cli]

- eksctl: 0.20.0 [install eksctl]

- helm: 3.x (the commands in this post use Helm 3 syntax)

Besides these CLI tools, I assume you already have a Kubernetes cluster up and running.

For this blog I deployed a Kubernetes cluster version 1.17 with AWS EKS.

Set environment variables

Before you start, set the following environment variables. All CLI commands in this post rely on them.

# Setting environment variables
# Name of the cluster
CLUSTERNAME=
# Namespace where Velero will be deployed
NAMESPACE=velero
# Name of the service account
SERVICEACCOUNT=veleros3
# Name of the bucket where the backups will be stored
BUCKET=
# Region of the bucket
REGION=
# AWS CLI profile
PROFILE=
# Sets the AWS account ID
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text --profile $PROFILE)
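A mistake that is easy to make here is leaving one of the variables empty, for example when `aws sts get-caller-identity` fails and AWS_ACCOUNT_ID silently ends up blank. The sketch below adds a hypothetical `require_vars` helper (not part of Velero or the AWS CLI) that stops early when any of the named variables is empty. It is plain POSIX shell and only defines a function.

```shell
# Hypothetical helper: exit with status 1 if any named variable is empty or unset.
# The function body runs in a subshell, so "exit" only leaves the function.
require_vars() (
  missing=""
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "Missing environment variables:$missing" >&2
    exit 1
  fi
)

# Usage, after setting the variables above:
# require_vars CLUSTERNAME NAMESPACE SERVICEACCOUNT BUCKET REGION PROFILE AWS_ACCOUNT_ID
```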

Installation of the Velero client

Following the documentation on Velero’s website, I installed the Velero v1.4.2 client on Ubuntu 18 (WSL2) with the commands below. Check their GitHub page for the latest version.

wget https://github.com/vmware-tanzu/velero/releases/download/v1.4.2/velero-v1.4.2-linux-amd64.tar.gz
tar -xvf velero-v1.4.2-linux-amd64.tar.gz
sudo mv velero-v1.4.2-linux-amd64/velero /usr/local/bin/
velero version --client-only
# This will result in:
# Client:
#   Version: v1.4.2
#   Git commit: ...
# Enable autocompletion (either line is enough)
echo 'source <(velero completion bash)' >>~/.bashrc
# The redirect needs root, so write the file through sudo tee
velero completion bash | sudo tee /etc/bash_completion.d/velero >/dev/null
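To keep the tarball, the extracted directory and the version from drifting apart, the version can live in a single variable. The helpers below (`velero_release_name` and `velero_download_url` are hypothetical names) assume the release assets keep the `velero-<version>-linux-amd64.tar.gz` naming used above.

```shell
# Assumption: release assets are named velero-<version>-linux-amd64.tar.gz and
# unpack into a directory of the same name, as in the commands above.
VELERO_VERSION=v1.4.2

# Prints the asset/directory name for a given version.
velero_release_name() {
  echo "velero-${1}-linux-amd64"
}

# Prints the GitHub download URL for a given version.
velero_download_url() {
  echo "https://github.com/vmware-tanzu/velero/releases/download/${1}/$(velero_release_name "$1").tar.gz"
}

# Usage (downloads and installs the client, so not run here):
# name=$(velero_release_name "$VELERO_VERSION")
# wget "$(velero_download_url "$VELERO_VERSION")"
# tar -xvf "${name}.tar.gz"
# sudo mv "${name}/velero" /usr/local/bin/
```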

Prepare your AWS environment

After installing the Velero CLI client, some preparations need to be made on the AWS side. This consists of the following steps:

- Create a backup location

- Configure the IAM permissions

Create a backup location

To create the backup location, I use the AWS CLI to create an S3 bucket. Of course, you can also use an existing bucket instead.

aws s3api create-bucket \
  --bucket $BUCKET \
  --region $REGION \
  --create-bucket-configuration LocationConstraint=$REGION \
  --profile $PROFILE
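One caveat with the command above: S3 rejects an explicit `LocationConstraint` for `us-east-1`, the default region, so the `--create-bucket-configuration` flag has to be dropped there. A minimal sketch with a hypothetical `bucket_location_args` helper:

```shell
# Hypothetical helper: prints the extra create-bucket arguments for a region.
# us-east-1 is the default location and must be created without a LocationConstraint.
bucket_location_args() {
  if [ "$1" != "us-east-1" ]; then
    echo "--create-bucket-configuration LocationConstraint=$1"
  fi
}

# Usage (the substitution is left unquoted so it splits into two arguments):
# aws s3api create-bucket --bucket "$BUCKET" --region "$REGION" \
#   $(bucket_location_args "$REGION") --profile "$PROFILE"
```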

Configure IAM permissions

After the bucket is created, the proper IAM permissions need to be created. There are multiple options for giving Velero the proper access rights. All three options described below use the same policy; they differ in how that policy is handed over to Velero.

- AWS user credentials: you create a user, attach the policy, export the user’s CLI credentials (access key ID and secret access key) and pass these to the Velero server installation. The disadvantage of this option is that you distribute credentials that could be lost, resulting in a security breach.

- AWS role: instead of creating a user, you can create an AWS role with the policy attached and provide the role through annotations when installing the Velero server. In this configuration you allow the service ec2.amazonaws.com to assume the role. You no longer risk losing credentials; however, other pods running in the cluster could also assume the same role, which is not desirable.

- AWS IRSA: in September 2019 AWS introduced fine-grained IAM roles for service accounts, called IRSA: IAM Roles for Service Accounts. In this setup pods are first-class citizens in IAM, based on an OpenID Connect (OIDC) identity provider. Unlike the previous AWS role, which all running pods can assume, AWS changed its identity APIs to recognize Kubernetes pods. By combining an OIDC identity provider with Kubernetes service account annotations, you can now use IAM roles at the pod level.

In this example we will be using the IAM Roles for Service Accounts.

Create the Velero IAM Policy

This policy allows Velero to store/delete data in S3 and create/delete snapshots in EC2.

cat > velero-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeVolumes",
        "ec2:DescribeSnapshots",
        "ec2:CreateTags",
        "ec2:CreateVolume",
        "ec2:CreateSnapshot",
        "ec2:DeleteSnapshot"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:PutObject",
        "s3:AbortMultipartUpload",
        "s3:ListMultipartUploadParts"
      ],
      "Resource": [
        "arn:aws:s3:::${BUCKET}/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::${BUCKET}"
      ]
    }
  ]
}
EOF

aws iam create-policy \
  --policy-name VeleroBackupPolicy \
  --policy-document file://velero-policy.json \
  --profile $PROFILE
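Because the heredoc substitutes ${BUCKET} without complaint, an empty variable produces a policy for `arn:aws:s3:::/*` that does not cover your bucket at all. A hypothetical `check_policy_bucket` function can catch that before `aws iam create-policy` runs; it only searches the rendered file for the two bucket ARNs as fixed strings.

```shell
# Hypothetical check: $1 is the rendered policy file, $2 the expected bucket name.
# Succeeds only if both the object ARN and the bucket ARN are present.
check_policy_bucket() (
  if [ -z "$2" ]; then
    echo "BUCKET is empty" >&2
    exit 1
  fi
  grep -qF "\"arn:aws:s3:::$2/*\"" "$1" && grep -qF "\"arn:aws:s3:::$2\"" "$1"
)

# Usage:
# check_policy_bucket velero-policy.json "$BUCKET" && aws iam create-policy ...
```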

Create the IAM Role for Service Account (IRSA)

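The code below first sets the OIDC_PROVIDER variable, which is substituted into the JSON trust policy. It then creates the role, attaches the Velero policy to it and associates an IAM OIDC identity provider with the cluster.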

# Set the OIDC Provider
OIDC_PROVIDER=$(aws eks describe-cluster --name $CLUSTERNAME --query "cluster.identity.oidc.issuer" --profile $PROFILE --output text | sed -e "s/^https:\/\///")

cat > trust.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:velero:veleros3"
        }
      }
    }
  ]
}
EOF

# Create the role and attach the trust relationship
aws iam create-role --role-name ServiceAccount-Velero-Backup \
  --assume-role-policy-document file://trust.json \
  --description "Service Account to give Velero the necessary permissions to operate." \
  --profile $PROFILE

# Attach the Velero policy to the role.
aws iam attach-role-policy \
  --role-name ServiceAccount-Velero-Backup \
  --policy-arn arn:aws:iam::$AWS_ACCOUNT_ID:policy/VeleroBackupPolicy \
  --profile $PROFILE

# Create the OIDC provider for the cluster
# Once created, this is listed under IAM > Identity Providers
eksctl utils associate-iam-oidc-provider \
  --cluster $CLUSTERNAME \
  --approve \
  --profile $PROFILE
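The trust policy ties the role to one specific service account through the `sub` condition. The hypothetical helpers below only illustrate how the two values in trust.json are built: the provider is the issuer URL without its `https://` prefix (what the `sed` call does), and the subject combines the namespace and the service account name. If you change NAMESPACE or SERVICEACCOUNT, the hardcoded `system:serviceaccount:velero:veleros3` in trust.json has to change with them.

```shell
# Hypothetical helper: strips the https:// scheme from the cluster's OIDC issuer URL,
# using parameter expansion instead of sed.
oidc_provider_from_issuer() {
  echo "${1#https://}"
}

# Hypothetical helper: builds the "sub" claim for a namespace ($1) and service account ($2).
irsa_subject() {
  echo "system:serviceaccount:${1}:${2}"
}

# Usage:
# irsa_subject "$NAMESPACE" "$SERVICEACCOUNT"
```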

Install Velero Server component

According to the installation documentation, you can install the server component either through the Velero CLI or through a Helm chart. In this example I use the Helm chart to deploy the component.

The relevant values from values.yaml are highlighted below.

Before you deploy the Helm chart, make sure values.yaml matches your own cluster configuration.

configuration:
  provider: aws
  backupStorageLocation:
    bucket: fourco-blog-velero-backup
# ...
# The serviceAccount allows the assumption of the role.
serviceAccount:
  server:
    create: true
    name: veleros3
    annotations:
      eks.amazonaws.com/role-arn: "arn:aws:iam::467456902248:role/ServiceAccount-Velero-Backup"

Make sure you have changed the bucket name to the name of your own bucket. The serviceAccount.server.name must match the service account referenced in the trust policy: “${OIDC_PROVIDER}:sub”: “system:serviceaccount:velero:veleros3”. Also replace the role ARN in serviceAccount.server.annotations with your own role ARN.
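Instead of editing the account ID into values.yaml by hand, the account-specific values can be written to a separate file and passed as a second `-f`; Helm merges the value files, and the rightmost file wins on conflicts. The sketch assumes the chart keys shown in the excerpt above; `write_velero_overrides` and `values-overrides.yaml` are hypothetical names.

```shell
# Hypothetical helper: writes the bucket, service account name and role ARN
# (taken from BUCKET, SERVICEACCOUNT and AWS_ACCOUNT_ID) to the file given in $1.
write_velero_overrides() {
  cat > "$1" <<EOF
configuration:
  backupStorageLocation:
    bucket: ${BUCKET}
serviceAccount:
  server:
    name: ${SERVICEACCOUNT}
    annotations:
      eks.amazonaws.com/role-arn: "arn:aws:iam::${AWS_ACCOUNT_ID}:role/ServiceAccount-Velero-Backup"
EOF
}

# Usage:
# write_velero_overrides values-overrides.yaml
# helm upgrade --install velero vmware-tanzu/velero --namespace "$NAMESPACE" \
#   -f values.yaml -f values-overrides.yaml
```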

Deploy the server component

# First create the namespace
kubectl create namespace $NAMESPACE
# Add the chart repository to Helm
helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts
# Install the server component
helm upgrade --install velero vmware-tanzu/velero \
  --namespace $NAMESPACE \
  -f values.yaml
# Check whether the installation was successful
kubectl get all -n velero
# NAME                         READY   STATUS    RESTARTS   AGE
# pod/velero-65c46dd69-jc84c   1/1     Running   0          4h4m
# NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
# deployment.apps/velero   1/1     1            1           4h4m
# NAME                               DESIRED   CURRENT   READY   AGE
# replicaset.apps/velero-65c46dd69   1         1         1       4h4m
# Analyze the log for errors.
kubectl logs -f -n velero deployments/velero
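Besides reading the logs, it can help to confirm that the rollout finished and that the service account actually carries the IRSA annotation; without it, Velero typically cannot reach the bucket. The hypothetical `verify_velero_install` helper below uses `kubectl rollout status` and a JSONPath query on the annotation, and reuses NAMESPACE and SERVICEACCOUNT from earlier.

```shell
# Hypothetical post-install check: waits for the velero deployment, then prints the
# role ARN annotated on the service account, failing if the annotation is missing.
verify_velero_install() (
  kubectl rollout status deployment/velero -n "$NAMESPACE" --timeout=120s || exit 1
  arn=$(kubectl get serviceaccount "$SERVICEACCOUNT" -n "$NAMESPACE" \
    -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}')
  if [ -z "$arn" ]; then
    echo "Service account $SERVICEACCOUNT has no eks.amazonaws.com/role-arn annotation" >&2
    exit 1
  fi
  echo "Velero uses role: $arn"
)
```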

Velero is now ready to create backups. However, at this point the cluster is still entirely empty, so I deploy WordPress to have something to back up.

Deploy WordPress

For testing purposes I deployed a WordPress application with Helm. For more information on its parameters and options, take a look at the chart’s GitHub page; this is out of scope for this blog.

kubectl create ns wordpress
helm repo add bitnami https://charts.bitnami.com/bitnami
helm install my-release bitnami/wordpress -n wordpress

After installing WordPress, I obtained the admin password and created a blog page titled “Velero backup”.
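Retrieving the admin password can be sketched as follows. This assumes the Bitnami chart stores it in a secret named `<release>-wordpress` under the key `wordpress-password`; that naming may differ between chart versions, so check the notes printed by `helm status my-release -n wordpress`. `wordpress_admin_password` is a hypothetical helper name.

```shell
# Hypothetical helper: $1 is the Helm release name, $2 the namespace.
# Assumption: the chart keeps the password base64-encoded in <release>-wordpress.
wordpress_admin_password() {
  kubectl get secret "${1}-wordpress" -n "$2" \
    -o jsonpath='{.data.wordpress-password}' | base64 -d
}

# Usage:
# wordpress_admin_password my-release wordpress
```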

Create a Velero backup

Now that we have some data in our cluster and have persistent volumes, we are going to create a backup.

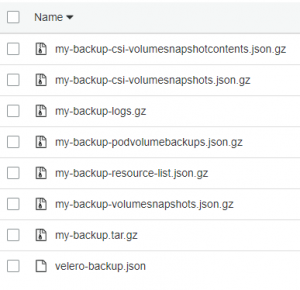

# We name the backup 'my-backup'
# In this example we create a backup of the namespace wordpress
# For more options, see: velero backup create --help
velero backup create my-backup --include-namespaces wordpress
# Describe gives you information about the backup
velero backup describe my-backup
# --details gives you even more details
velero backup describe my-backup --details
velero backup get
# NAME        STATUS      CREATED                          EXPIRES   STORAGE LOCATION   SELECTOR
# my-backup   Completed   2020-09-01 09:29:27 +0200 CEST   29d       default            <none>
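`velero backup get` only shows the state at that moment. For scripting, the Velero CLI offers a `--wait` flag on `velero backup create`; alternatively, you can poll the phase of the Backup (or Restore) custom resource directly. The sketch below does the latter with a hypothetical `wait_for_velero_phase` function; MAX_ATTEMPTS and POLL_SECONDS are assumed knobs of this sketch, not Velero settings.

```shell
# Hypothetical helper: $1 is "backup" or "restore", $2 the resource name.
# Polls .status.phase of the backups.velero.io / restores.velero.io resource in NAMESPACE.
wait_for_velero_phase() (
  i=0
  while [ "$i" -lt "${MAX_ATTEMPTS:-60}" ]; do
    phase=$(kubectl get "${1}s.velero.io" "$2" -n "$NAMESPACE" \
      -o jsonpath='{.status.phase}')
    case "$phase" in
      Completed) echo "$phase"; exit 0 ;;
      PartiallyFailed|Failed|FailedValidation) echo "$phase"; exit 1 ;;
    esac
    # Empty (not visible yet), New, InProgress or any other phase: poll again.
    i=$((i + 1))
    sleep "${POLL_SECONDS:-5}"
  done
  echo "Timed out waiting for $1 $2" >&2
  exit 1
)

# Usage:
# wait_for_velero_phase backup my-backup
```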

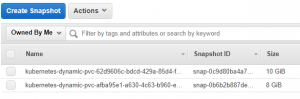

After the backup was created, I deleted my entire cluster, leaving behind only the Velero S3 bucket containing the backup and the snapshots of the two persistent volumes created by the WordPress installation.

Because a new cluster gets a different OIDC identity provider, I also deleted the IAM role with the code below.

aws iam detach-role-policy \
  --role-name ServiceAccount-Velero-Backup \
  --policy-arn arn:aws:iam::$AWS_ACCOUNT_ID:policy/VeleroBackupPolicy \
  --profile $PROFILE
# Delete the IAM ROLE (IRSA)
aws iam delete-role \
  --role-name ServiceAccount-Velero-Backup \
  --profile $PROFILE

Restore the cluster

Once a completely new cluster is up and running, you first need to re-create the IAM role and its trust policy. The trust relationship has to change because the new cluster has a different OIDC provider.

Execute the steps described in the section “Create the IAM Role for Service Account (IRSA)”, with CLUSTERNAME set to the name of the new cluster.

After creating the IAM role, deploy the Velero server component again, exactly as done above. We keep values.yaml unchanged, so it refers to the existing S3 bucket containing the backup.

kubectl create ns $NAMESPACE
helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts
helm upgrade --install velero vmware-tanzu/velero \
  --namespace $NAMESPACE \
  -f values.yaml
kubectl logs -f -n velero deployment/velero

Once the Velero server component is running smoothly, we can restore from the existing backup:

velero backup get
# NAME        STATUS      CREATED                          EXPIRES   STORAGE LOCATION   SELECTOR
# my-backup   Completed   2020-09-01 09:29:27 +0200 CEST   29d       default            <none>
# velero backup describe my-backup --details
# If you don't limit the restore to a namespace, it will also try to restore kube-system resources, aws-auth, etc.
# Velero sees that newer versions of these resources exist, so they remain untouched, but the restore status will be Partially Failed.
velero restore create --from-backup my-backup --include-namespaces wordpress
# Restore request "my-backup-20200901093543" submitted successfully.
# Run `velero restore describe my-backup-20200901093543` or `velero restore logs my-backup-20200901093543` for more details.
velero restore describe my-backup-20200901093543

During the restore, Velero re-creates the volumes from the earlier snapshots and restores all Kubernetes resources. Once the restore has succeeded, a new load balancer is created and attached to the cluster, bringing the application back to exactly the state it was in at the beginning.
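A quick way to check the volume part of the restore is to confirm that every PersistentVolumeClaim in the restored namespace is `Bound` again. The hypothetical `all_pvcs_bound` helper below reads the claim phases with a JSONPath query and fails on an empty namespace or on any claim that is not bound.

```shell
# Hypothetical post-restore check: $1 is the namespace (wordpress in this post).
all_pvcs_bound() (
  phases=$(kubectl get pvc -n "$1" -o jsonpath='{.items[*].status.phase}')
  if [ -z "$phases" ]; then
    echo "No PVCs found in $1" >&2
    exit 1
  fi
  # The phases are space-separated, so word splitting yields one phase per claim.
  for phase in $phases; do
    if [ "$phase" != "Bound" ]; then
      echo "Found PVC in phase $phase" >&2
      exit 1
    fi
  done
)

# Usage:
# all_pvcs_bound wordpress && echo "All volumes restored"
```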

Cleanup

Having created a role, a policy and so on, we also want to clean up the environment after the test. The following code removes the created resources:

# Delete the helm chart
helm delete velero -n velero
helm delete my-release -n wordpress
kubectl delete ns velero
kubectl delete ns wordpress
aws iam detach-role-policy \
  --role-name ServiceAccount-Velero-Backup \
  --policy-arn arn:aws:iam::$AWS_ACCOUNT_ID:policy/VeleroBackupPolicy \
  --profile $PROFILE
# Delete the IAM ROLE (IRSA)
aws iam delete-role \
  --role-name ServiceAccount-Velero-Backup \
  --profile $PROFILE
# Delete the Velero Policy
aws iam delete-policy \
  --policy-arn arn:aws:iam::$AWS_ACCOUNT_ID:policy/VeleroBackupPolicy \
  --profile $PROFILE
# Delete the bucket with the backups
aws s3 rb s3://$BUCKET --force --profile $PROFILE
# Delete the Snapshots
snapshots=$(aws ec2 describe-snapshots \
  --owner-ids self \
  --filters Name=tag:velero.io/backup,Values=my-backup \
  --query "Snapshots[*].[SnapshotId]" \
  --profile $PROFILE \
  --output text)
for snap in $snapshots; do
  aws ec2 delete-snapshot --snapshot-id $snap --profile $PROFILE
done

Creating periodic backups

In this blog post we created a one-time manual backup. It is also possible to create periodic backups with Velero by configuring a ‘schedule’.

# See all options
velero create schedule --help
# Some examples from the help menu are:
# Create a backup every 6 hours
velero create schedule NAME --schedule="0 */6 * * *"
# Create a daily backup of the web namespace
velero create schedule NAME --schedule="@every 24h" --include-namespaces web
# Create a weekly backup, each living for 90 days (2160 hours)
velero create schedule NAME --schedule="@every 168h" --ttl 2160h0m0s
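The `--ttl` value is a duration in hours, so a retention period in days has to be multiplied by 24: 90 days × 24 = 2160h0m0s, as in the last example. Velero's default TTL is 30 days (720h0m0s), which is why `velero backup get` showed an expiry of 29d shortly after the backup was created. A tiny hypothetical helper for the conversion:

```shell
# Hypothetical helper: converts a retention period in days ($1) into a --ttl value.
ttl_for_days() {
  echo "$(( $1 * 24 ))h0m0s"
}

# Usage:
# velero create schedule NAME --schedule="@every 168h" --ttl "$(ttl_for_days 90)"
```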

Conclusion

Whether you run your cluster on AWS, Google Cloud or Azure, Velero is a helpful tool for backing up cluster resources and persistent volumes. However, before you use it in production clusters, make sure you have invested some time experimenting with the tool and gaining experience.